“Short-leg” of RISC-V | RISC-V 的小短腿

Preface

I came across some code performance issues only on RISC-V recently. Their root cause is the short offset of branch instruction on RISC-V. I am writing a blog post to share the issues and discuss possible solutions for compiler and CPU architecture in the future.

Branch offset length on other ISAs

On x86-64, JMP instructions has signed 8 bits or 32 bits relative offset. Thus, we can jump to +-2GiB code space in a single conditional branch instruction.

On aarch64, B.cond instructions has signed 19 bits relative offset. The PC must be aligned to 4Bytes, so relative addresses are encoded as "imm19" times 4. Thus, we can jump to +-1MiB code space in a single conditional branch instruction.

On RISC-V (whatever 32I/64I), branch instructions has signed imm[12:1] offset. The PC must be aligned to 2Bytes. Thus, we can only jump to +- 4KiB code space in a single conditional branch instruction.

On MIPS32, branch instructions has signed 16 bits offset. The PC must be aligned to 4Bytes, so relative addresses are encoded as "imm16" times 4. Thus, we can jump to +-128KiB code space in a single conditional branch instruction.

In conclusion, RISC-V has the shortest branch offset (4K) among x86-64 (2G) and aarch64(1M) and even MIPS32(128K).

What will happen if the branch offset is too small?

A case was shown here using loop unroll: https://godbolt.org/z/6jf5Pon3h

int a[64], b[64], c[64], d[64];

int e[64], f[64], g[64], h[64];

int foo(bool do_memset) {

if (do_memset) { [[likely]] // some code > 4KB

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) a[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) b[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) c[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) d[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) e[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) f[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) g[j*8+i] *=2333;

for (int j=0;j<8;j++)

for (int i=0;i<8;i++) h[j*8+i] *=2333;

return 0;

}

else return 1;

}Then both GCC 14.2.0 and LLVM 18.1.0 will generate "branch + jump" to skip the if-true block.

foo(bool):

mv a1, a0

li a0, 1

bnez a1, .LBB0_1

j .LBB0_2

.LBB0_1:

li a0, 0

.Lpcrel_hi0:

auipc a1, %pcrel_hi(a)

addi a2, a1, %pcrel_lo(.Lpcrel_hi0)

lw a3, 0(a2)

lui a1, 1

addi a1, a1, -1763

lw a4, 4(a2)

mul a3, a3, a1

sw a3, 0(a2)

lw a3, 8(a2)

mul a4, a4, a1

...But if we have sufficient length on branch offset (emulated by reducing the number of "for" blocks), the code will be:

foo(bool):

mv a1, a0

li a0, 1

beqz a1, .LBB0_2

li a0, 0

.Lpcrel_hi0:

auipc a1, %pcrel_hi(a)

addi a1, a1, %pcrel_lo(.Lpcrel_hi0)

lw a3, 0(a1)

lui a2, 1

addi a2, a2, -1763

lw a4, 4(a1)

mul a3, a3, a2

sw a3, 0(a1)

lw a3, 8(a1)

mul a4, a4, a2

...What will happen if the code running on real CPU?

The point is: Untaken branch does not require Branch Preditor to work.

In this case, without sufficient offset in branch instruction, the compiler will convert an "unlikely branch" to "likely branch + unlikely jump" cause the branch predictor must know the first branch should be taken and should have the branch target address hit in the branch target buffer.

Suppose a workload with so many branches without locality overflows the storage size of the branch predictor in the CPU chip itself. In that case, code like this will perform very poorly on an trivial OoO CPU because of the frequent front and backend flush, and there is no chance of using OoO to have a better throughput to tolerate long latency memory access.

A very bad case

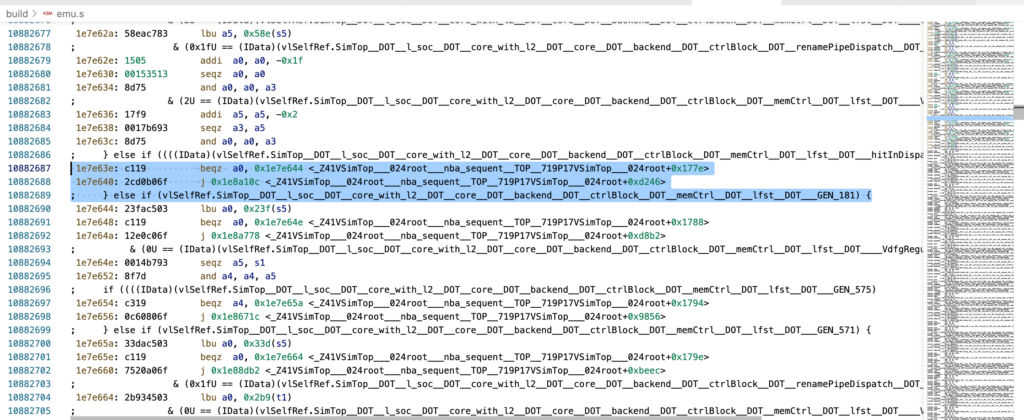

Verilator generated code + clang PGO

Features:

- Widespread branch (nearly every 10 instructions)

- Huge code size on large designs

- No locality

- Huge memory footprint, many high latency memory access

- Branch direction can be easily determined by PGO

But the code on RISC-V is like:

Possible Solutions

Our Goal: Reduce number of likely taken branch instruction

ISA Side

Qualcomm proposed an extension called Xqcilb (Long Branch) that supports 32-bit immediate in jump instructions for RV32 . This extension is expected to reduce the number of instructions required for long jumps. However, we still lack support for 32-bit immediate branches.

CPU Side

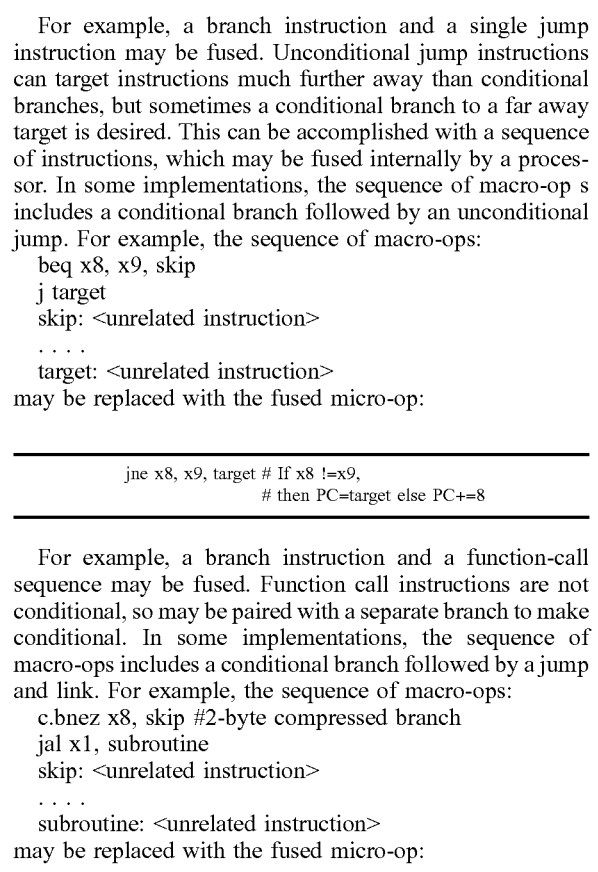

Intuition: Fuse branch + jump to a single branch with long offset in frontend

Related work: Macro-op fusion by SiFive

And the patent from SiFive shows this case even in 2018:

Compiler Side

Since we have no conditional branch to register on RISC-V, solutions like jump table island on LLVM on ARM are not possible.

But we can have a jump table island using branch instruction itself. A simple solution can be placing an island based on jump instruction in every 4KB code region. However, if the processor already has macro-op fusion, emitting code in this way will have more code size and cause more jump instructions to be generated and executed.

“On RISC-V (whatever 32I/64I), branch instructions has signed imm[13:1] offset. The PC must be aligned to 2Bytes. Thus, we can only jump to +- 4KiB code space in a single conditional branch instruction.”

A small typo, it should be “imm[12:1]”.

Thanks for pointing that out. Fixed.

感谢作者,所以本质原因是分支腿短,跳不出嵌套循环地址空间对吗?

不是循环地址空间,是跳不到放在最后的unlikely的代码空间。